Latest

Grok 3’s Brief Censorship of Trump and Musk Sparks Controversy

Who knew AI could play favorites? Artificial intelligence was supposed to be neutral, right? Just pure cold logic with no...

Google Veo 2 AI Video Model Pricing Revealed at 50 Cents per Second

Google has long been a pioneer in artificial intelligence, consistently leading advancements and breakthroughs through its dedicated AI research divisions,...

Apple Launches iPhone 16e in China to Compete with Local Brands

Apple is preparing to launch the iPhone 16e in China, aiming to regain its competitive edge in one of the...

Trump Administration Reportedly Shutting Down Federal EV Chargers

The General Services Administration (GSA), the federal agency responsible for managing government buildings, is reportedly planning to shut down all...

US AI Safety Institute Faces Major Cuts Amid Government Layoffs

The US AI Safety Institute (AISI), a key organization focused on AI risk assessment and policy development, is facing significant...

HP Acquires Humane: What It Means for the Future of AI Wearables

HP’s recent $116 million acquisition of Humane has sent ripples through the tech industry. Once valued at $240 million, the...

Did xAI Mislead About Grok 3’s Benchmarks? OpenAI Disputes Claims

Debates over AI benchmarks have resurfaced following xAI’s recent claims about its latest model, Grok 3. An OpenAI employee publicly...

Nvidia CEO Jensen Huang Says Market Got It Wrong About DeepSeek’s Impact

Nvidia founder and CEO Jensen Huang said the market got it wrong regarding DeepSeek’s technological advancements and its potential to...

Apple Ends iCloud Encryption in UK After Government Demands

Apple has confirmed the removal of Advanced Data Protection (ADP) for iCloud backups in the UK following government demands for...

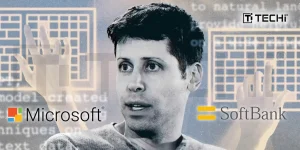

OpenAI to Shift AI Compute from Microsoft to SoftBank

According to a report by The Information on Friday, OpenAI is forecasting a significant shift over the next five years in who it...

OpenAI Blocks Accounts in China & North Korea Over Misuse

OpenAI has announced the removal of user accounts from China and North Korea. It blocked the accounts because the company believes...

Meta Faces Legal Battle Over AI Training with Copyrighted Content

Meta is under intense scrutiny after newly unsealed court documents revealed internal discussions about using copyrighted content, including pirated books,...
